textX is a meta-language (i.e. a language for language definition) for domain-specific language (DSL) specification in Python.

In a nutshell, textX will help you build your textual language in an easy way. You can invent your own language or build a support for an already existing textual language or file format.

From a single grammar description, textX automatically builds a meta-model (in the form of Python classes) and a parser for your language. The parser will parse expressions of your language and automatically build a graph of Python objects (i.e. the model) corresponding to the meta-model.

textX is inspired by Xtext - a Java based language workbench for building DSLs with full tooling support (editors, debuggers etc.) on the Eclipse platform. If you like Java and Eclipse check it out. It is a great tool.

A video tutorial for textX installation and implementation of a simple data modeling language is below.

For a not-so-basic video tutorial check out State Machine video tutorial.

For an introduction to DSLs in general here are some references:

- Federico Tomassetti: The complete guide to (external) Domain Specific Languages.

- Pierre Bayerl: self-dsl.

For an in-depth coverage on the subject we recommend the following books:

- Voelter, Markus, et al. DSL engineering: Designing, implementing and using domain-specific languages. dslbook.org, 2013.

- Kelly, Steven, and Juha-Pekka Tolvanen. Domain-specific modeling: enabling full code generation. John Wiley & Sons, 2008.

Feature highlights

-

Meta-model/parser from a single description

A single description is used to define both language concrete syntax and its meta-model (a.k.a. abstract syntax). See the description of grammar and metamodel.

-

Automatic model (AST) construction

Parse tree will automatically be transformed to a graph of python objects (a.k.a. the model). See the model section.

Python classes will be created by textX but, if needed, user supplied classes may be used. See custom classes.

-

Automatic linking

You can have references to other objects in your language and the textual representation of the reference will be resolved to the proper python reference automatically.

-

Automatic parent-child relationships

textX will maintain a parent-child relationships imposed by the grammar. See parent-child relationships.

-

Parser control

Parser can be configured with regard to case handling, whitespace handling, keyword handling etc. See parser configuration.

-

Model/object post-processing

A callbacks (so called processors) can be registered for models and individual classes. This enables model/object postprocessing (validation, additional changes etc.). See processors section.

-

Grammar modularization - imports

Grammar can be split into multiple files and then files/grammars can be imported where needed. See Grammar modularization.

-

Scope Providers

Scope Providers allow different types of scoping. See Scoping.

-

Multi-meta-model support

Different meta-models can be combined. Typically some of these meta-models extend other meta-models (grammar modularization) and reference each other. Special scope providers support file-extension-based allocation of model files to meta models. See Multi meta-model support

-

Meta-model/model visualization

Both meta-model and parsed models can be visulized using GraphViz software package. See visualization section.

Installation

$ pip install textX[cli]

Previous command requires pip to be installed.

Also, notice the use of [cli] which means that we would like to use CLI

textx command. If you just want to deploy your language most probably you

won't need CLI support.

To verify that textX is properly installed run:

$ textx

You should get output like this:

Usage: textx [OPTIONS] COMMAND [ARGS]...

Options:

--debug Debug/trace output.

--help Show this message and exit.

Commands:

check Check/validate model given its file path.

generate Run code generator on a provided model(s).

list-generators List all registered generators

list-languages List all registered languages

version Print version info.

To install development (master branch) version:

$ pip install --upgrade https://github.com/textX/textX/archive/master.zip

Python versions

textX works with Python 3.8+. Other versions might work but are not tested.

Getting started

See textX Tutorials to get you started:

- Hello World

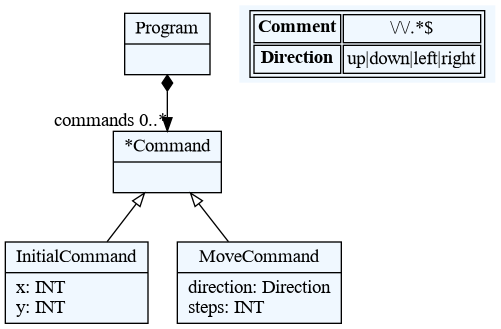

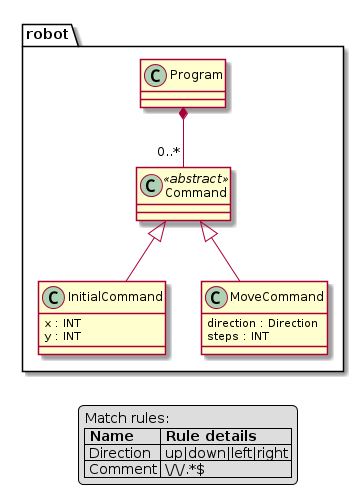

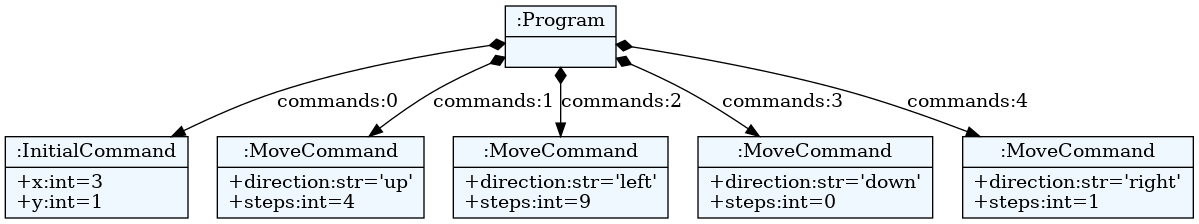

- Robot

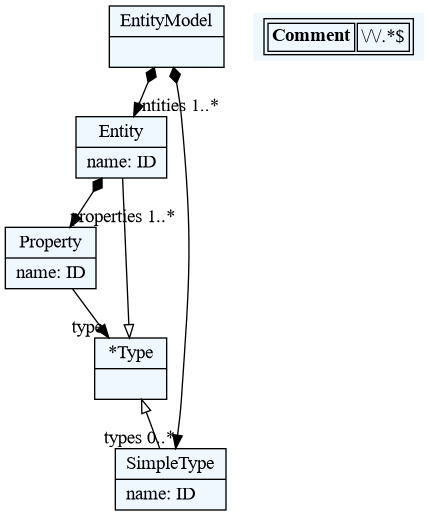

- Entity

- State Machine - video tutorial

- Toy language compiler

- self-dsl

For specific information read various User Guide sections.

You can also try textX in our playground. There is a dropdown with several examples to get you started.

A full example project that shows how multi-meta-modeling feature can be used is also available in a separate git repository.

To create the initial layout of your project quickly take a look at project scaffolding.

Discussion and help

For general questions and help please use

StackOverflow. Just make

sure to tag your question with the textx tag.

For issues, suggestions and feature request please use GitHub issue tracker.

Projects using textX

Here is a non-complete list of projects using textX.

-

Open-source

- pyecore - ECore implementation in Python. Vincent Aranega is doing a great work on integrating textX with pyecore. The idea is that the integration eventually gets merged to the main textX repo. For now, you can follow his work on his fork of textX.

- pyTabs - A Domain-Specific Language (DSL) for simplified music notation

- applang - Textual DSL for generating mobile applications

- pyFlies - A DSL for designing experiments in psychology

- ppci - Pure python compiler infrastructure.

- Expremigen - Expressive midi generation

- fanalyse - Fortran code parser/analyser

- Silvera - A DSL for microservice based software development

- cutevariant - A standalone and free application to explore genetics variations from VCF file. Developed by labsquare - A community for genomics software

- osxphotos - Python app to export pictures and associated metadata from Apple Photos on macOS.

- StrictDoc - Software for technical documentation and requirements management.

-

Commercial

- textX is used as a part of Typhoon-HIL's schematic editor for the description of power electronic and DSP schemes and components.

If you are using textX to build some cool stuff drop me a line at igor dot dejanovic at gmail. I would like to hear from you!

Editor/IDE support

Visual Studio Code support

There is currently an ongoing effort to build tooling support around Visual Studio Code. The idea is to auto-generate VCS plugin with syntax highlighting, outline, InteliSense, navigation, visualization. The input for the generator would be your language grammar and additional information specified using various DSLs.

Projects that are currently in progress are:

-

textX-LS - support for Language Server Protocol and VS Code for any textX based language.

-

textx-gen-coloring - a textX generator which generates syntax highlighting configuration for TextMate compatible editors (e.g. VSCode) from textX grammars.

-

textx-gen-vscode - a textX generator which generates VSCode extension from textX grammar.

-

viewX - creating visualizers for textX languages

Stay tuned ;)

Other editors

If you are a vim editor user check out support for vim.

For emacs there is textx-mode which is also available in MELPA.

You can also check out textX-ninja project. It is currently unmaintained.

Citing textX

If you are using textX in your research project we would be very grateful if you cite our paper [Dejanovic2017].

@article{Dejanovic2017,

author = {Dejanovi\'{c}, I. and Vaderna, R. and Milosavljevi\'{c}, G. and Vukovi\'{c}, \v{Z}.},

doi = {10.1016/j.knosys.2016.10.023},

issn = {0950-7051},

journal = {Knowledge-Based Systems},

pages = {1--4},

title = {{TextX: A Python tool for Domain-Specific Languages implementation}},

url = {http://www.sciencedirect.com/science/article/pii/S0950705116304178},

volume = {115},

year = {2017}

}

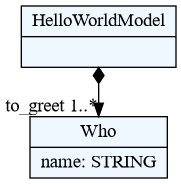

Hello World example

This is an example of very simple Hello World like language.

A .tx file extension is used for textX grammar. See textX

grammar on what you can do inside a grammar file, including

comments!

These are the steps to build a very basic Hello World - like language.

-

Write a language description in textX (file

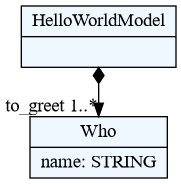

hello.tx):HelloWorldModel: 'hello' to_greet+=Who[','] ; Who: name = /[^,]*/ ;Description consists of a set of parsing rules which at the same time describe Python classes that will be dynamically created and used to instantiate objects of your model. This small example consists of two rules:

HelloWorldModelandWho.HelloWorldModelstarts with the keywordhelloafter which a one or moreWhoobject must be written separated by commas.Whoobjects will be parsed, instantiated and stored in ato_greetlist on aHelloWorldModelobject.Whoobjects consists only of its names which must be matched the regular expression rule/[^,]*/(match non-comma zero or more times). Please see textX grammar section for more information on writing grammar rules. -

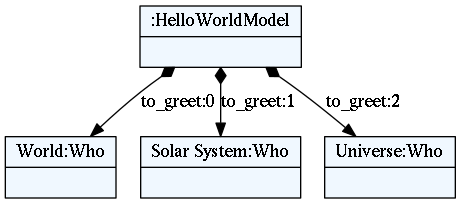

At this point you can check and visualise meta-model using following command from the command line:

$ textx generate hello.tx --target dot Generating dot target from models: /home/igor/repos/textX/textX/examples/hello_world/hello.tx -> /home/igor/repos/textX/textX/examples/hello_world/hello.dot To convert to png run "dot -Tpng -O hello.dot"

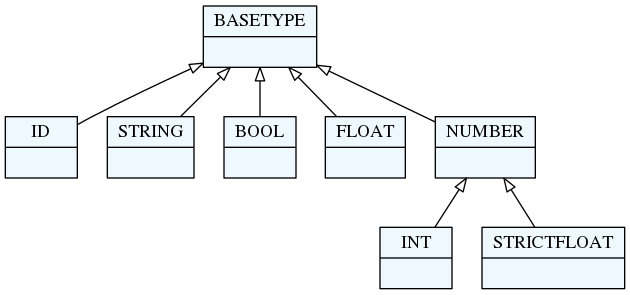

You can see that for each rule from language description an appropriate Python class has been created. A BASETYPE hierarchy is built-in. Each meta-model has it.

-

Create some content (i.e. model) in your new language (

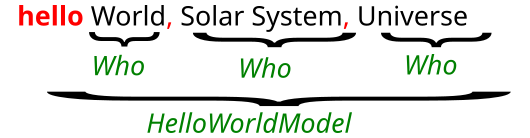

example.hello):hello World, Solar System, UniverseYour language syntax is also described by language rules from step 1.

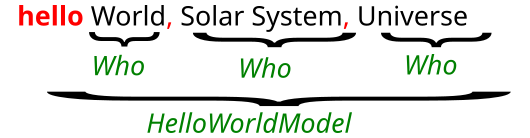

If we break down the text of the example model it looks like this:

We see that the whole line is a

HelloWorldModeland the partsWorld,Solar System, andUniverseareWhoobjects. Red coloured text is syntactic noise that is needed by the parser (and programmers) to recognize the boundaries of the objects in the text. -

To use your models from Python first create meta-model from textX language description (file

hello.py):from textx import metamodel_from_file hello_meta = metamodel_from_file('hello.tx') -

Than use meta-model to create models from textual description:

hello_model = hello_meta.model_from_file('example.hello')Textual model

example.hellowill be parsed and transformed to plain Python objects. Python classes of the created objects are those defined by the meta-model. Returned objecthello_modelwill be a reference to the root of the model, i.e. the object of classHelloWorldModel. You can use the model as any other Python object. For example:print("Greeting", ", ".join([to_greet.name for to_greet in hello_model.to_greet])) -

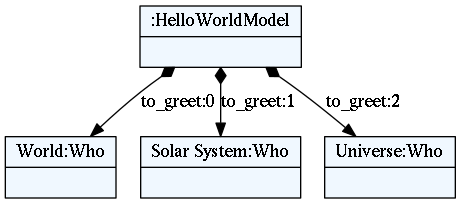

You can optionally export model to

dotfile to visualize it. Run following from the command line:$ textx generate example.hello --grammar hello.tx --target dot Generating dot target from models: /home/igor/repos/textX/textX/examples/hello_world/example.hello -> /home/igor/repos/textX/textX/examples/hello_world/example.dot To convert to png run "dot -Tpng -O example.dot"

This is an object graph automatically constructed from

example.hellofile.We see that each

Whoobject is contained in the python attributeto_greetof list type which is defined by the grammar. -

Use your model: interpret it, generate code … It is a plain Python graph of objects with plain attributes!

Try out a complete tutorial for building a simple robot language.

textX grammar

The language syntax and the meta-model are defined by the textX grammar in the form of a set of textX rules.

Rules

The basic building blocks of the textX language are rules. Each rule is written in the following form:

Hello:

'hello' who=ID

;

This rule is called Hello. After the rule name, there is a colon. The body of

the rule is given as a textX expression, starting at the colon and ending with a

semicolon. This rule tells us that the pattern of Hello objects in input

strings consists of the string literal hello, followed by the ID rule (here ID

is a reference to a built-in rule, more about this in a moment).

These are valid Hello objects:

hello Alice

hello Bob

hello foo1234

Rule Hello at the same time defines a Python class Hello. When the rule is

recognized in the input stream, an object of this class will get created and the

attribute who will be set to whatever the rule ID has matched after the word

hello (this is specified by the assignment who=ID).

Of course, there are many more rule expressions than those shown in this small example. In the next section, a detailed description of each textX expression is given.

textX base types

In the previous example you have seen an ID rule. This rule is one of the

built-in rules that form the base of textX's type system. Base types/rules are

depicted in the following figure:

IDrule: matches a common identifier consisting of letters, digits and underscores. The regex pattern that describe this rule is'[^\d\W]\w*\b'. This match will be converted to a Python string.INTrule: matches an integer number. This match will be converted to a Pythonintinstance.FLOATrule: will match a floating point number. This match will be converted to a Pythonfloatinstance ('FLOAT' is a direct subtype of 'BASETYPE'; in order to distinguish floats and ints, 'STRICTFLOAT' was introduced).STRICTFLOATrule: will match a floating point number. This match will be converted to a Pythonfloatinstance. A 'STRICTFLOAT' will not match an 'INT' (without "." or "e|E"). A 'NUMBER' is either a 'STRICTFLOAT' or an 'INT', and will, thus, be converted to a float or an int, respectively.BOOLrule: matches the wordstrueorfalse. This match will be converted to a Pythonboolinstance.STRINGrule: matches a quoted string. This match will be converted to a Pythonstrinstance.

textX base types are automatically converted to python types during object instantiation. See auto-initialization for more information.

Rule expressions

Rule expressions represent the body of a rule. They is specified using basic expressions and operators.

The basic expressions are:

- Matches

- String match (

'...'or"...") - Regex match (

/.../)

- String match (

- Sequence

- Ordered choice (

|) - Optional (

?) - Repetitions

- Zero or more (

*) - One or more (

+) - Unordered group (

#)

- Zero or more (

- References

- Match reference

- Link reference (

[..])

- Assignments

- Plain (

=) - Boolean (

?=) - Zero or more (

*=) - One or more (

+=)

- Plain (

- Syntactic predicates

- Not (

!) - negative lookahead - And (

&) - positive lookahead

- Not (

- Match suppression

Matches

Match expressions are, besides base type rules, the expressions at the lowest level. They are the basic building blocks for more complex expressions. These expressions will consume input on success.

There are two types of match expressions:

-

String match - is written as a single quoted string. It will match a literal string on the input.

Here are a few examples of string matches:

'blue' 'zero' 'person' -

Regex match - uses regular expression defined inside

/ /to match the input. Therefore, it defines a whole class of strings that can be matched. Internally a pythonremodule is used.Here are few example of regex matches:

/\d*/ /\d{3,4}-\d{3}/ /[-\w]*\b/ /[^}]*/For more information on Regular Expression in Python see Regular Expression HOWTO.

When the metamodel has the option

use_regexp_groupset toTrue, then a regular expression with exactly one group is replaced by the group. This can be used to define multiline strings to be stored in the model without the surrounding limiters:Model: 'data' '=' data=/(?ms)\"{3}(.*?)\"{3}/;An example model could be

data = """ This is a multiline text! """When creating a metamodel with this grammar and the option

use_regexp_groupenabled, a multiline string delimited with"""is accepted:(?ms)activates the multiline option and the dot matches everything option.\"{3}matches the delimited""". The pattern"(.*?)is a non-greedy variant of get anything.metamodel = metamodel_from_str(grammar, use_regexp_group=True)

Sequence

Sequence is a textX expression that is given by just writing contained sub-expressions one after another. For example,the following rule:

Colors:

"red" "green" "blue"

;

is defined as a sequence consisting of three string matches (red green and

blue). Contained expressions will be matched in the exact order they are

given. If some of the expressions do not match, the sequence as a whole will

fail. The above rule defined by the sequence will match only the following

string:

red green blue

If whitespace skipping is enabled (it is by default), arbitrary whitespaces can occur between matched words.

Ordered choice

Ordered choice is given as a set of expression separated by the| operator.

This operator will try to match contained expression from left to right and the

first match that succeeds will be used.

Example:

Color:

"red" | "green" | "blue"

;

This will match either red or green or blue and the parser will try to

match the expressions in that order.

In most classic parsing technologies an unordered match (alternative) is used. This may lead to ambiguous grammars where multiple parse tree may exist for the same input string.

Underlying parsing technology of textX

is Arpeggio which is a parser based

on PEG grammars and thus the | operator directly translates to Arpeggio's PEG

ordered choice. Using ordered choice yields unambiguous parsing. If the text

parses there is only one possible parse tree.

Optional

Optional is an expression that will match the contained expression if that is

possible, but will not fail otherwise. Thus, optional expression always

succeeds.

Example:

MoveUp:

'up' INT?

;

INT match is optional in this example. This means that the up keyword is

required, but the following integer may or may not be found.

Following lines will match:

up 45

up 1

up

Optional expressions can be more complex. For example:

MoveUp:

'up' ( INT | FLOAT )?

Now, an ordered choice in the parentheses is optional.

Repetitions

-

Zero or more repetition is specified by the

*operator and will match the contained expression zero or more times. Here is an example:Colors: ("red"|"green"|"blue")* ;In this example zero or more repetition is applied on the ordered choice. In each repeated match one color will be matched, trying from left to right. Thus,

Colorsrule will match color as many times as possible, but will not fail if no color exists in the input string. The following would be matched by theColorsrule:red blue greenbut also:

red blue blue red red greenor an empty string.

-

One or more repetition is specified by

+operator and will match the contained expression one or more times. Thus, everything that is written for zero or more applies here except that at least one match must be found for this expression to succeed. Here is an above example modified to match at least one color:Colors: ("red"|"green"|"blue")+ ; -

Unordered group is a special kind of a sequence. Syntactically it is similar to a repetition. It is specified by the

#operator and must be applied to either sequences or ordered choices. This operator will match each element of the sequence or the ordered choice in an arbitrary order:Colors: ("red" "green" "blue")# ;For the previous example all following lines are valid:

red blue green red green blue blue green red ...But, the following lines are not valid:

red blue red green blue greenConsider this example:

Modifier: (static?='static' final?='final' visibility=Visibility)# ; Visibility: 'public' | 'private' | 'protected';We want to provide modifiers to the type declarations in our language. Furthermore, we want modifiers to be written in any order.

The following lines will match (thanks to

?=operator, only visibility must be specified):public public static final protected static ...You can combine unordered groups with parenthesized groups. Lets look at the following example:

Unordered: (('first' 'second') 'third')# ;This will match group with sequence

first secondandthirdin arbitrary order but the sequencefirst secondmaintains the order. Thus, these inputs will match:first second third third first secondBut these won't:

third second first second first thirdAlternatively, you can use ordered choice instead of grouping. This will be equivalent with the previous example:

Unordered: ('first' 'second' | 'third')# ;

Unordered group may also have repetition modifiers defined.

Assignments

Assignments are used as a part of the meta-model deduction process. Each assignment will result in an attribute of the meta-class created by the rule.

Each assignment consists of the LHS (left-hand side) and the RHS (right-hand side). The LHS is always a name of the meta-class attribute while the RHS can be a reference to other rules (either a match or a link reference) or a simple match (string or regex match). For example:

Person:

name=Name ',' surname=Surname ',' age=INT ',' height=INT ';'

;

The Name and Surname rules referenced in the RHS of the first two

assignments are not given in this example.

This example describes the rule and meta-class Person, that will parse and

instantiate the Person objects with these four attributes:

name- which will use the ruleNameto match the input, it will be a reference to the instance of theNameclass,surname- will useSurnamerule to match the input,age- will use the built-in typeINTto match a number from the input string.agewill be converted to the pythoninttype.height- the same asage, but the matched number will be assigned to theheightattribute of thePersoninstance.

Notice the comma as the separator between matches and the semicolon match at the end of the rule. Those matches must be found in the input but the matched strings will be discarded. They represent a syntactic noise.

If the RHS is one of textX BASETYPEs, then the matched string will be converted

to some of the plain python types (e.g. int, string, boolean).

If the RHS is a string or regex match like in this example:

Color:

color=/\w+/

;

then the attribute given by the LHS will be set as the string matched by the RHS regular expression or string.

If the RHS is a reference to some other rule, then the attribute given by the LHS will be set to refer to the object created by the RHS rule.

Following strings are matched by the Person rule from above:

Petar, Petrovic, 27, 185;

John, Doe, 34, 178;

There are four types of assignments:

-

Plain assignment (

=) will match its RHS once and assign what is matched to the attribute given by the LHS. The above example uses plain assignments.Examples:

a=INT b=FLOAT c=/[a-Z0-9]+/ dir=Direction -

Boolean assignment (

?=) will set the attribute toTrueif the RHS match succeeds and toFalseotherwise.Examples::

cold ?= 'cold' number_given ?= INT -

Zero or more assignment (

*=) - LHS attribute will be alist. This assignment will keep matching the RHS as long as the match succeeds and each matched object will be appended to the attribute. If no match succeeds, the attribute will be an empty list.Examples::

commands*=Command numbers*=INT -

One or more assignment (

+=) - same as the previous assignment, but it must match the RHS at least once. If no match succeeds, this assignment does not succeed.

Multiple assignment to the same attribute

textX allows for multiple assignments to the same attribute. For example:

MyRule:

a=INT b=FLOAT a*=ID

;

Here a attribute will always be a Python list. The type of a will be

OBJECT as the two assignments have declared different types for a (INT and

ID).

Consider this example:

Method:

'func(' (params+=Parameter[','])? ')'

;

Parameter:

type=ID name=ID | name=ID

;

In Parameter rule, the name attribute assignments are part of different

ordered choice alternatives and thus name will never have more than one value

and thus should not be a list. The type of name is consistent in both

assignments so it will be ID.

The rule of the thumb for multiple assignments is that if there is no possibility for an attribute to collect more than one value during parsing it will be a single value object, otherwise it will be a list.

References

Rules can reference each other. References are usually used as a RHS of the assignments. There are two types of rule references:

-

Match rule reference - will call another rule. When instance of the called rule is created, it will be assigned to the attribute on the LHS. We say that the referred object is contained inside the referring object (i.e. they form a parent-child relationship).

Example::

Structure: 'structure' '{' elements*=StructureElement '}' ;StructureElementwill be matched zero or more times. With each match, a new instance of theStructureElementwill be created and appended to theelementspython list. Aparentattribute of eachStructureElementwill be set to the containingStructure. -

Link rule reference - is written as a referred rule name inside square brackets. It will match an identifier of some class object at the given place and convert that identifier to a Python reference to the target object. This reference resolving is done automatically by textX. By default, a

nameattribute is used as the identifier of the object. By default, all objects of the same class are in a single namespace. This can be configured by scope providers and Reference Resolving Expression Language.Example:

ScreenType: 'screen' name=ID "{" '}' ; ScreenInstance: 'screen' type=[ScreenType] ;The

typeattribute is a link to theScreenTypeobject. This is a valid usage:// This is a definition of the ScreenType object screen Introduction { } // And this is a reference link to the ScreenType object defined above // ScreenInstance instance screen IntroductionIntroductionwill be matched, theScreenTypeobject with that name will be found and thetypeattribute ofScreenInstanceinstance will be set to it.IDrule is used by default to match the link identifier. If you want to change that, you can use the following syntax:ScreenInstance: 'screen' type=[ScreenType:WORD] ;Here, instead of

IDaWORDrule is used to match the object's identifier.

Attributes with name names are used for reference auto-resolving. By default,

a dict lookup is used, thus they must be of a hashable type. See issues

#40 and

#266.

A usual error is to match the name in this fashion:

MyObj: name+=ID['.'];

Here, name will be a list of strings that are separated by dot and that will

not work as the name must be hashable. The best way to implement this and make

name hashable is:

MyObj: name=FQN;

FQN: ID+['.'];

Now, name will be the string returned by the FQN match rule.

Syntactic predicates

Syntactic predicates are operators that are used to implement lookahead. The lookahead is used to do parsing decision based on the part of the input ahead of the current position. Syntactic predicates are written as a prefix of some textX rule expression. The rule expression will be used to match input ahead of the current location in the input string. It will either fail or succeed but will never consume any input.

There are two type of syntactic predicates:

-

Not - negative lookahead (

!) - will succeed if the current input doesn't match the expression given after the!operator.Example problem:

Expression: Let | ID | NUMBER; Let: 'let' expr+=Expression 'end' ;In this example we have nested expressions built with indirectly recurssive

Letrule. The problem is that theIDrule fromExpressionwill match keywordendand thus will consume end ofLetrule, so the parser will hit EOF without completing anyLetrules. To fix this, we can specify thatIDwill match any identifier except keywordsletandendlike this:Expression: Let | MyID | NUMBER; Let: 'let' expr+=Expression 'end' ; Keyword: 'let' | 'end'; MyID: !Keyword ID;Now,

MyIDwill matchIDonly if it is not a keyword. -

And - positive lookahead (

&) - will succeed if the current input starts with the string matched by the expression given after the&operator.Example:

Model: elements+=Element ; Element: AbeforeB | A | B ; AbeforeB: a='a' &'b' // this succeeds only if 'b' follows 'a' ; A: a='a'; B: a='b';Given the input string

a a a bfirst twoachars will be matched by the ruleA, but the thirdawill be matched by the ruleAbeforeB. So, even whenAbeforeBmatches onlyaand is tried before any other match, it will not succeed for the first twoachars because they are not followed byb.

Match suppression

Sometimes it is necessary to define match rules that should return only parts of

the match. For that we use match the suppression operator (-) after the

expression you want to suppress.

For example:

FullyQualifiedID[noskipws]:

/\s*/-

QuotedID+['.']

/\s*/-

;

QuotedID:

'"'?- ID '"'?-

;

Because we use noskipws rule modifier, FullyQualifiedID does not skip

whitespaces automatically. Thus, we have to match whitespaces ourself, but we

don't want those whitespaces in the resulting string. You might wonder why we

are using noskipws. It is because we do not want whitespaces in between each

QuotedID match. So, for example, first. second shouldn't match but

first.second should.

In the rule FullyQualifiedID we are suppressing whitespace matches /\s*/-.

We also state in QuotedID that there are optional quotation marks around each

ID, but we don't want those either '"'?-.

Given this input:

first."second".third."fourth"

FullyQualifiedID will return:

first.second.third.fourth

Repetition modifiers

Repetition modifiers are used for the modification of the repetition expressions

(*, +, #, *=, +=). They are specified in brackets [ ]. If there are

more modifiers, they are separated by a comma.

Currently, there are two modifiers defined:

-

Separator modifier - is used to define separator on multiple matches. Separator is a simple match (string match or regex match).

Example:

numbers*=INT[',']Here, a separator string match is defined (

','). This will match zero or more integers separated by commas.45, 47, 3, 78A regex can also be specified as a separator.

fields += ID[/;|,|:/]This will match IDs separated by either

;or,or:.first, second; third, fourth: fifth -

End-of-line terminate modifier (

eolterm) - used to terminate repetition on end-of-line. By default the repetition match will span lines. When this modifier is specified, repetition will work inside the current line only.Example:

STRING*[',', eolterm]Here we have a separator as well as the

eoltermdefined. This will match zero or more strings separated by commas inside one line."first", "second", "third" "fourth"If we run the example expression once on this string, it will match the first line only.

"fourth"in the second line will not be matched.

Be aware that when eolterm modifier is used, its effect starts from the

previous match. For example:

Conditions:

'conditions' '{'

varNames+=WORD[eolterm] // match var names until end of line

'}'

In this example varNames must be matched in the same line as

conditions { because eolterm effect start immediately.

In this example we wanted to give the user the freedom to specify var names on

the next line, even to put some empty lines if he/she wishes. In order to do

that, we should modify the example like this::

Conditions:

'conditions' '{'

/\s*/

varNames+=WORD[eolterm] // match var names until end of line

'}'

Regex match /\s*/ will collect whitespaces (spaces and new-lines)

before the WORD match begins. Afterwards, repeated matches will work

inside one line only.

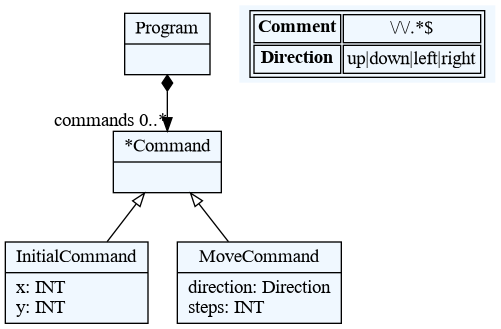

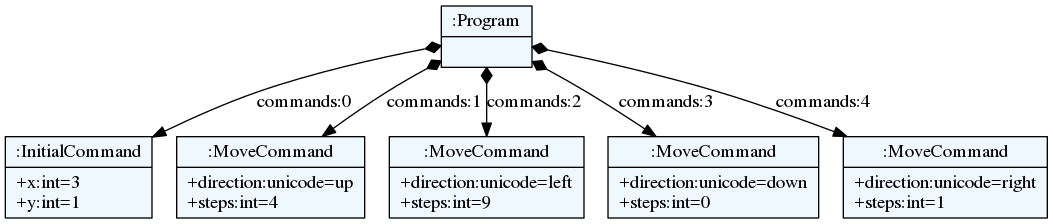

Rule types

There are three kinds of rules in textX:

- Common rules (or just rules)

- Abstract rules

- Match rules

Common rules are rules that contain at least one assignment, i.e., they have attributes defined. For example:

InitialCommand:

'initial' x=INT ',' y=INT

;

This rule has two defined attributes: x and y.

Abstract rules are rules that have no assignments and reference at least one abstract or common rule. They are usually given as an ordered choice of other rules and they are used to generalize other rules. For example:

Program:

'begin'

commands*=Command

'end'

;

Command:

MoveCommand | InitialCommand

;

In this example, Python objects in the commands list will either contain

instances of MoveCommand or InitialCommand. Command rule is abstract. A

meta-class of this rule will never be instantiated. Abstract rule can also be

used in link rule references:

ListOfCommands:

commands*=[Command][',']

;

Abstract rules may reference match rules and base types. For example:

Value:

STRING | FLOAT | BOOL | Object | Array | "null"

;

In this example, the base types as well as the string match "null" are all

match rules, but Object and Array are common rules and therefore Value is

abstract.

Abstract rules can be a complex mix of rule references and match expressions as long as there is at least one abstract or common reference. For example:

Value:

'id' /\d+-\d+/ | FLOAT | Object

;

A rule with a single reference to an abstract or common rule is also abstract:

Value:

OtherRule

;

Abstract rules can have multiple references in a single alternative with the following rules:

- If all rule references in a single alternative are match rules the result will be a concatenation of all match rule results,

- If there is a common rule reference then it would be the result and all surrounding match rules are used only for parsing

- If there are multiple common rules then the first will be used as a result and the rest only for parsing

For example:

Model: STRING | ID | '#' Rule1 Sufix; // abstract rule

Rule1: a=INT; // common rule

Prefix: '#';

Sufix: ID | SomeOtherSufix;

SomeOtherSufix: '--' '#';

In this example matching # 42 -- # at input would yield and instance of

Rule1 with attribute a set to integer 42. This comes from a third

alternative '#' Rule1 Sufix that succeeds and the # and Sufix would be

used just for parsing and the result would be discarded.

Another example:

Model: (STRING | ID | '#' Rule1) Sufix;

Rule1: a=INT; // common rule

Sufix: '--';

This is also abstract rule as we are referencing Rule1 which is a common rule

and we have no assignments. Matching # 42 -- as input will give an instance of

Rule1 with attribute a set to integer 42.

In this example:

Model: STRING|Rule1|ID|Prefix INT Sufix;

Rule1: a='a'; // a common rule

Prefix: '#';

Sufix: '--';

we see that input # 42 -- would be recognized by the last alternative and the

result will be string #42--, i.e. all match rule results would be concatenated.

But if we give a as input than the result will be an instance of Rule1 with

attribute a set to string 'a'.

In the following example we see what happens if we have multiple common rule references:

Model: STRING|Rule1|ID|Prefix Rule1 Sufix Rule2; // Reference both Rule1 and Rule2

Rule1: a=INT; // common rule

Rule2: a=STRING; // common rule

Prefix: '#';

Sufix: '--';

For input # 42 -- "some string" the model will be an instance of Rule1 with

attribute a set to 42 as it is the first common rule reference in the last

alternative (the one that succeeds) but Rule2, despite being discarded, must

also be satisfied during parsing or syntax error would be produced.

Match rules are rules that have no assignments either direct or indirect, i.e. all referenced rules are match rules too. They are usually used to specify enumerated values or some complex string matches that can't be done with regular expressions.

Examples:

Widget:

"edit"|"combo"|"checkbox"|"togglebutton"

;

Name:

STRING|/(\w|\+|-)+/

;

Value:

/(\w|\+|-)+/ | FLOAT | INT

;

These rules can be used in match references only (i.e., you can't link to these

rules as they don't exists as objects), and they produce objects of the base

python types (str, int, bool, float).

All base type rules (e.g., INT, STRING, BASETYPE) are match rules.

Rule modifiers

Rule modifiers are used for the modification of the rule's expression. They are

specified in brackets ([ ]) at the beginning of the rule's definition after

the rule's name. Currently, they are used to alter parser configuration for

whitespace handling on the rule level.

Rule modifiers act on the current rule and all rules referenced inside the rule (recursively): unless a refrenced rule has an explicit rule modifier, the currently active modifier state is propagated to referenced rules.

There are two rule modifiers at the moment:

-

skipws, noskipws - are used to enable/disable whitespace skipping during parsing. This will change the global parser's

skipwssetting given during the meta-model instantiation.Example:

Rule: 'entity' name=ID /\s*/ call=Rule2; Rule2[noskipws]: 'first' 'second';In this example

Rulerule will use default parser behaviour set during the meta-model instantiation, whileRule2rule will disable whitespace skipping. This will changeRule2to match the wordfirstsecond, but not wordsfirst secondwith whitespaces in between. -

ws - used to redefine what is considered to be a whitespaces on the rule level. textX by default treats space, tab and new-line as a whitespace characters. This can be changed globally during the meta-model instantiation (see Whitespace handling) or per rule using this modifier.

Example:

Rule: 'entity' name=ID /\s*/ call=Rule2; Rule2[ws='\n']: 'first' 'second';In this example

Rulewill use the default parser behavior but theRule2will alter the white-space definition to be new-line only. This means that the wordsfirstandsecondwill get matched only if they are on separate lines or in the same line but without other characters in between (even tabs and spaces).

Grammar comments

Syntax for comments inside a grammar is // for line comments and /* ... */

for block comments.

Language comments

To support comments in your DSL use a special grammar rule Comment. textX will

try to match this rule in between each other normal grammar match (similarly to

the whitespace matching). If the match succeeds, the matched content will be

discarded.

For example, in the robot language example comments are defined like this:

Comment:

/\/\/.*$/

;

Which states that everything starting with // and continuing until the end of

line is a comment.

Grammar modularization

Grammars can be defined in multiple files and then imported. Rules used in the references are first searched for in the current file and then in the imported files, in the order of the import.

Example:

import scheme

Library:

'library' name=Name '{'

attributes*=LibraryAttribute

scheme=Scheme

'}'

;

Scheme rule is defined in scheme.tx grammar file imported at the beginning.

Grammar files may be located in folders. In that case, dot notation is used.

Example:

import component.types

types.tx grammar is located in the component folder relatively to the

current grammar file.

If you want to override the default search order, you can specify a fully qualified name of the rule using dot notation when giving the name of the referring object.

Example:

import component.types

MyRule:

a = component.types.List

;

List:

'[' values+=BASETYPE[','] ']'

;

List from component.types is matched/instantiated and set to a attribute.

Inspecting textX grammars programmatically

Since textX is a meta-language (a language for language definition) any textual language can be specified using it, even textX grammar language itself.

This definition enable loading of textX grammar as a plain Python model which can be further analyzed for various purposes. This can be used, e.g. for tooling which need to analyze the grammar beyond of just syntactic and semantic checks (e.g. syntax highlighting may analyze grammar to discover keywords that needs to be colored).

To load grammar model first get the textX language meta-model with:

textx_mm = metamodel_for_language('textx')

and then call either grammar_model_from_str or grammar_model_from_file

method on this meta-model object:

grammar_model = textx_mm.grammar_model_from_file(

join(abspath(dirname(__file__)), 'pyflies.tx'))

Then investigate this model as you would do with any other model:

assert len(grammar_model.imports_or_references) == 3

assert len(grammar_model.rules) == 45

str_matches = get_children_of_type("SimpleMatch", grammar_model)

...

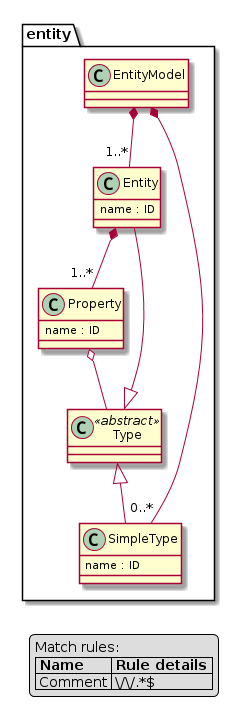

textX meta-models

textX meta-model is a Python object that knows about all classes that can be

instantiated while parsing the input. A meta-model is built from the grammar by

the functions metamodel_from_file or metamodel_from_str in the

textx.metamodel module.

from textx import metamodel_from_file

my_metamodel = metamodel_from_file('my_grammar.tx')

Each rule from the grammar will result in a Python class kept in the meta-model. Besides, meta-model knows how to parse the input strings and convert them to model.

Parsing the input and creating the model is done by model_from_file and

model_from_str methods of the meta-model object:

my_model = my_metamodel.model_from_file('some_input.md')

When parsing a model file or string a new parser is cloned for each model.

This parser can be accessed via the model attribute _tx_parser.

Custom classes

For each grammar rule a Python class with the same name is created dynamically.

These classes are instantiated during the parsing of the input string/file to

create a graph of python objects, a.k.a. model or Abstract-Syntax Tree (AST).

Most of the time dynamically created classes will be sufficient, but sometimes

you will want to use your own classes instead. To do so use parameter classes

during the meta-model instantiation. This parameter is a list of your classes

that should be named the same as the rules from the grammar which they

represent.

from textx import metamodel_from_str

grammar = '''

EntityModel:

entities+=Entity // each model has one or more entities

;

Entity:

'entity' name=ID '{'

attributes+=Attribute // each entity has one or more attributes

'}'

;

Attribute:

name=ID ':' type=[Entity] // type is a reference to an entity. There are

// built-in entities registered on the meta-model

// for primitive types (integer, string)

;

'''

class Entity:

def __init__(self, parent, name, attributes):

self.parent = parent

self.name = name

self.attributes = attributes

# Use our Entity class. "Attribute" class will be created dynamically.

entity_mm = metamodel_from_str(grammar, classes=[Entity])

Now entity_mm can be used to parse the input models where our Entity class

will be instantiated to represent each Entity rule from the grammar.

When passing a list of classes (as shown in the example above), you need to have rules for all of these classes in your grammar (else, you get an exception). Alternatively, you can also pass a callable (instead of a list of classes) to return user classes given a rule name. In that case, only rule names found in the grammar are used to query user classes. See unittest.

Constructor of the user-defined classes should accept all attributes defined by

the corresponding rule from the grammar. In the previous example, we have

provided name and attributes attributes from the Entity rule. If the class

is a child in a parent-child relationship (see the next section), then the

parent constructor parameter should also be given.

Classes that use __slots__ are supported. Also, initialization of custom

classes is postponed during model loading and done after reference resolving but

before object processors call (see Using the scope provider to modify a

model) to ensure that

immutable objects (e.g. using attr frozen

feature), that

can't be changed after initialization, are also supported.

Parent-child relationships

There is often an intrinsic parent-child relationship between object in the

model. In the previous example, each Attribute instance will always be a child

of some Entity object.

textX gives automatic support for these relationships by providing the parent

attribute on each child object.

When you navigate model each child instance will have a parent

attribute.

Always provide the parent parameter in user-defined classes for each class that is a child in a parent-child relationship.

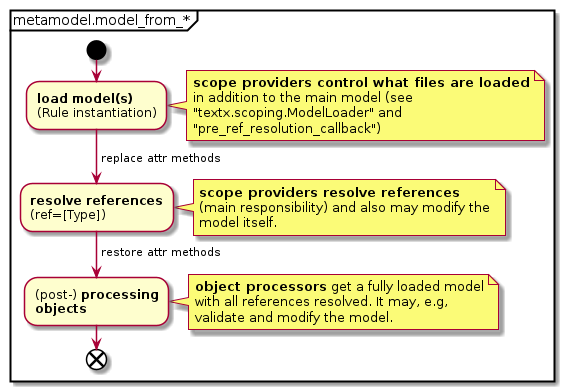

Processors

To specify static semantics of the language textX uses a concept called processor. Processors are python callables that can modify the model elements during model parsing/instantiation or do some additional checks that are not possible to do by the grammar alone.

There are two types of processors:

- model processors - are callables that are called at the end of the parsing when the whole model is instantiated. These processors accept the model and meta-model as parameters.

- object processors - are registered for particular classes (grammar rules) and are called when the objects of the given class is instantiated.

Processors can modify model/objects or raise exception (TextXSemanticError) if

some constraint is not met. User code that calls the model instantiation/parsing

can catch and handle this exception.

Model processors

To register a model processor call register_model_processor on the meta-model

instance.

from textx import metamodel_from_file

# Model processor is a callable that will accept meta-model and model as its

# parameters.

def check_some_semantics(model, metamodel):

...

... Do some check on the model and raise TextXSemanticError if the semantics

... rules are violated.

my_metamodel = metamodel_from_file('mygrammar.tx')

# Register model processor on the meta-model instance

my_metamodel.register_model_processor(check_some_semantics)

# Parse the model. check_some_semantics will be called automatically after

# a successful parse to do further checks. If the rules are not met,

# an instance of TextXSemanticError will be raised.

my_metamodel.model_from_file('some_model.ext')

Object processors

The purpose of the object processors is to validate or alter the object being constructed. They are registered per class/rule.

Let's do some additional checks for the above Entity DSL example.

def entity_obj_processor(entity):

'''

Check that Ethe ntity names are capitalized. This could also be specified

in the grammar using regex match but we will do that check here just

as an example.

'''

if entity.name != entity.name.capitalize():

raise TextXSemanticError('Entity name "%s" must be capitalized.' %

entity.name, **get_location(entity))

def attribute_obj_processor(attribute):

'''

Obj. processors can also introduce changes in the objects they process.

Here we set "primitive" attribute based on the Entity they refer to.

'''

attribute.primitive = attribute.type.name in ['integer', 'string']

# Object processors are registered by defining a map between a rule name

# and the callable that will process the instances of that rule/class.

obj_processors = {

'Entity': entity_obj_processor,

'Attribute': attribute_obj_processor,

}

# This map/dict is registered on a meta-model by the "register_obj_processors"

# call.

entity_mm.register_obj_processors(obj_processors)

# Parse the model. At each successful parse of Entity or Attribute, the registered

# processor will be called and the semantics error will be raised if the

# check does not pass.

entity_mm.model_from_file('my_entity_model.ent')

For another example of the usage of an object processor that modifies the

objects, see object processor

move_command_processor robot example.

If object processor returns a value that value will be used instead of the original object. This can be used to implement e.g. expression evaluators or on-the-fly model interpretation. For more information

For more information please take a look at the tests.

Object processors decorated with textx.textxerror_wrap will transform

any exceptions not derived from TextXError to a TextXError (including

line/column and filename information). This can be useful, if

object processors transform values using non-textx libraries (like datetime)

and you wish to get the location in the model file, where errors occur

while transforming the data (see

these tests).

Built-in objects

Often, you will need objects that should be a part of each model and you do not

want users to specify them in every model they create. The most notable example

are primitive types (e.g. integer, string, bool).

Let's provide integer and string Entities to our Entity meta-model in

order to simplify the model creation so that the users can use the names of

these two entities as the Attribute types.

class Entity:

def __init__(self, parent, name, attributes):

self.parent = parent

self.name = name

self.attributes = attributes

entity_builtins = {

'integer': Entity(None, 'integer', []),

'string': Entity(None, 'string', [])

}

entity_mm = metamodel_from_file(

'entity.tx',

classes=[Entity] # Register Entity user class,

builtins=entity_builtins # Register integer and string built-in objs

)

Now an integer and string Attribute types can be used.

See model

and

Entitiy

example for more.

Creating your own base type

Match rules by default return Python string type.

Built-in match rules (i.e. BASETYPEs) on the other hand return Python base

types.

You can use object processors to create your type by specifying match rule in the grammar and object processor for that rule that will create an object of a proper Python type.

Example:

Model:

'begin' some_number=MyFloat 'end'

;

MyFloat:

/\d+\.(\d+)?/

;

In this example MyFloat rule is a match rule and by default will return Python

string, so attribute some_number will be of string type. To change that,

register object processor for MyFloat rule:

mm = metamodel_from_str(grammar)

mm.register_obj_processors({'MyFloat': lambda x: float(x)}))

Now, MyFloat will always be converted to Python float type.

Using match filters you can override built-in rule's conversions like this:

Model:

some_float=INT

;

mm = metamodel_from_str(grammar)

mm.register_obj_processors({'INT': lambda x: float(x)}))

In this example we use built-in rule INT that returns Python int type.

Registering object processor with the key INT we can change default behaviour

and convert what is matched by this rule to some other object (float in this

case).

Auto-initialization of the attributes

Each object that is recognized in the input string will be instantiated and its attributes will be set to the values parsed from the input. In the event that a defined attribute is optional, it will nevertheless be created on the instance and set to the default value.

Here is a list of the default values for each base textX type:

- ID - empty string - ''

- INT - int - 0

- FLOAT - float - 0.0 (also 0 is possible)

- STRICTFLOAT - float - 0.0 (0. or .0 or 0e1, but not 0, which is an INT)

- BOOL - bool - False

- STRING - empty string - ''

Each attribute with zero or more multiplicity (*=) that does not match any

object from the input will be initialized to an empty list.

An attribute declared with one or more multiplicity (+=) must match at least

one object from the input and will therefore be transformed to python list

containing all matched objects.

The drawback of this auto-initialization system is that we can't be sure if the attribute was missing from the input or was matched, but the given value was the same as the default value.

In some applications it is important to distinguish between those two

situations. For that purpose, there is a parameter auto_init_attributes of the

meta-model constructor that is True by default, but can be set to False to

prevent auto-initialization from taking place.

If auto-initialization is disabled, then each optional attribute that was not

matched on the input will be set to None. This is true for the plain

assignments (=). An optional assignment (?=) will always be False if the

RHS object is not matched in the input. The multiplicity assignments (*= and

+=) will always be python lists.

Optional model parameter definitions

A meta-model can define optional model parameters. Such definitions are stored

in model_param_defs and define optional parameters, which can be specified

while loading/creating a model through model_from_str or model_from_file.

Details: see tx_model_params.

metamodel.model_param_defs can be queried (like a dict) to retrieve possible

extra parameters and their descriptions for a meta-model. It is also used to

restrict the additional parameters passed to model_from_str or

model_from_file.

Default parameters are:

project_root: this model parameter is used by theGlobalRepoto set a project directory, where all file patterns not referring to an absolute file position are looked up.

An example of a custom model parameter definition used to control the behavior

of an object processor is given in

test_reference_to_nontextx_attribute.py,

(test_object_processor_with_optional_parameter_*; specifying a parameter

while loading) and

test_reference_to_nontextx_attribute.py

(defining the parameter in the metamodel).

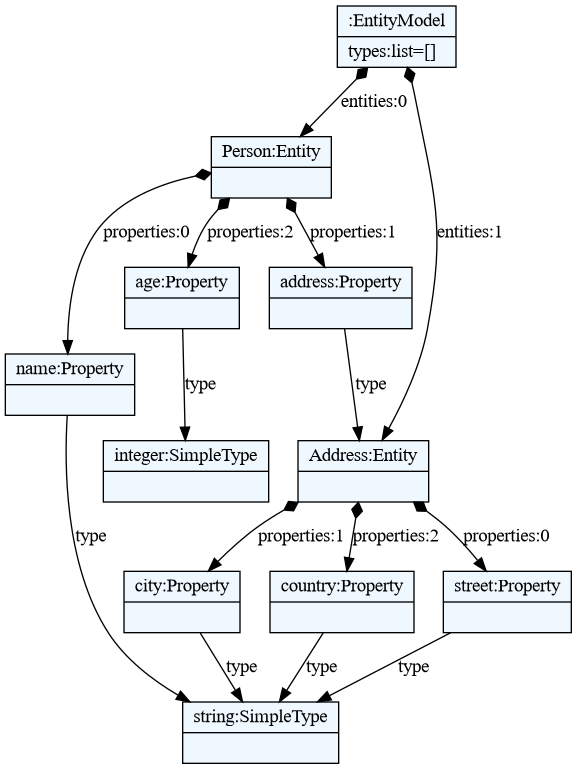

textX models

Model is a python object graph consisting of POPOs (Plain Old Python Objects) constructed from the input string that conforms to your DSL defined by the grammar and additional model and object processors.

In a sense, this structure is an Abstract Syntax Tree (AST) known from classic parsing theory, but it is actually a graph structure where each reference is resolved to a proper python reference.

Each object is an instance of a class from the meta-model. Classes are created on-the-fly from the grammar rules or are supplied by the user.

A model is created from the input string using the model_from_file and

model_from_str methods of the meta-model instance.

from textx import metamodel_from_file

my_mm = metamodel_from_file('mygrammar.tx')

# Create model

my_model = my_mm.model_from_file('some_model.ext')

The model_from_file method takes an optional argument encoding

to control the input encoding of the model file to be loaded.

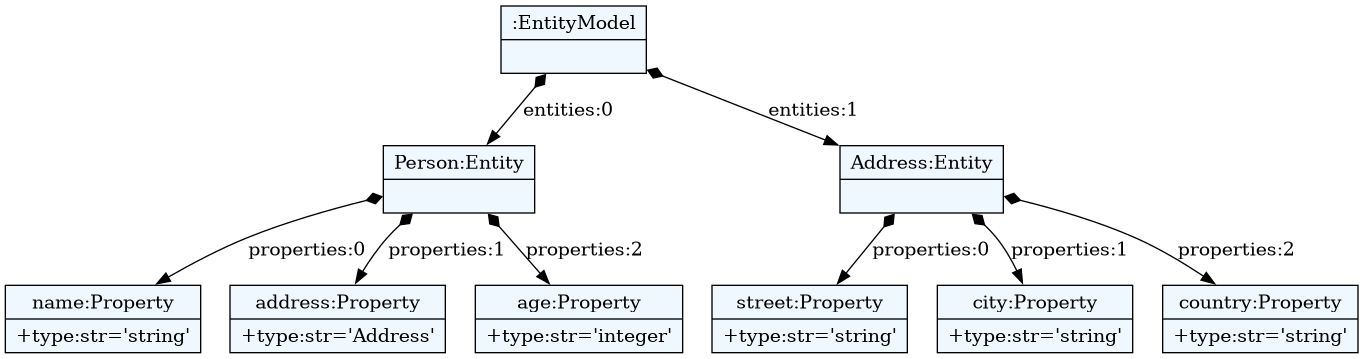

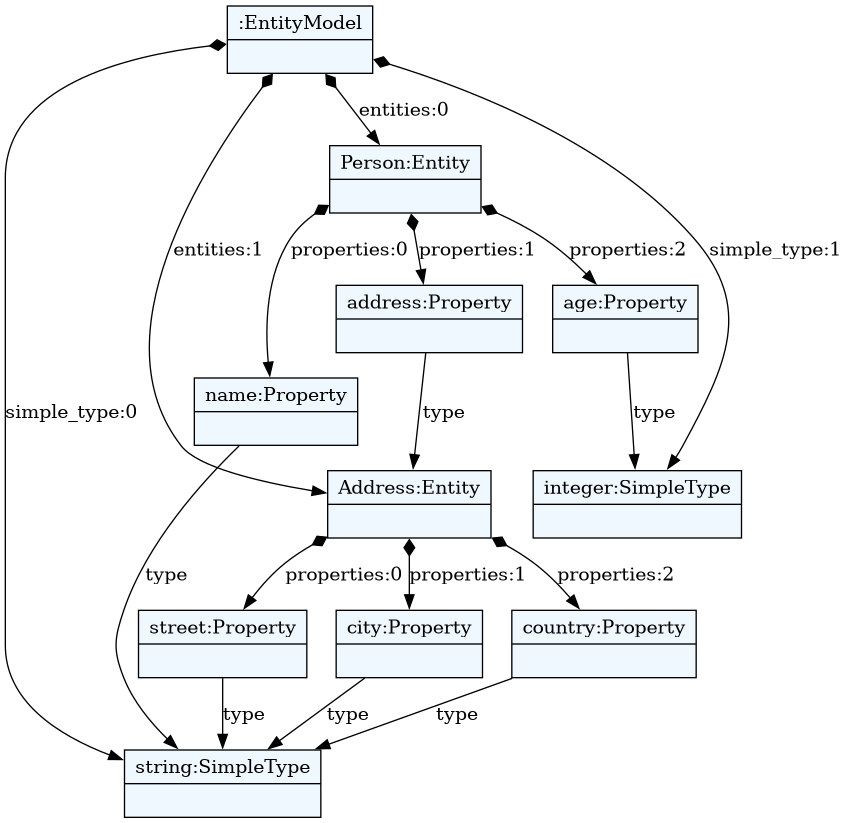

Let's take the Entity language used in Custom Classes section.

Content of entity.tx file:

EntityModel:

entities+=Entity // each model has one or more entities

;

Entity:

'entity' name=ID '{'

attributes+=Attribute // each entity has one or more attributes

'}'

;

Attribute:

name=ID ':' type=[Entity] // type is a reference to an entity. There are

// built-in entities registered on the meta-model

// for the primitive types (integer, string)

;

For the meta-model construction and built-in registration see Custom Classes and Builtins sections.

Now, we can use the entity_mm meta-model to parse and create Entity models.

person_model = entity_mm.model_from_file('person.ent')

Where person.ent file might contain this:

entity Person {

name : string

address: Address

age: integer

}

entity Address {

street : string

city : string

country : string

}

Model API

Functions given in this section can be imported from textx module.

get_model(obj)

obj (model object)

Finds the root of the model following parent references.

get_metamodel(obj)

Returns meta-model the model given with obj conforms to.

get_parent_of_type(typ, obj)

typ (str or class): the name of type of the type itself of the model object searched for.obj (model object): model object to start search from.

Finds first object up the parent chain of the given type. If no parent of the

given type exists None is returned.

get_children_of_type(typ, root, children_first=False, should_follow=lambda obj: True)

typ (str or python class): The type of the model object we are looking for.root (model object): Python model object which is the start of the search process.children_first (bool): indicates if children should be returned before their parents.should_follow (callable): a predicate used to decide if the object should be traversed.

Returns a list of all model elements of type typ starting from model element

root. The search process will follow containment links only. Non-containing

references shall not be followed.

get_children(selector, root, children_first=False, should_follow=lambda obj: True)

selector (callable): a predicate returning True if the object is of interest.root (model object): Python model object which is the start of the search process.children_first (bool): indicates if children should be returned before their parents.should_follow (callable): a predicate used to decide if the object should be traversed.

Returns a list of all selected model elements starting from model element

root. The search process will follow containment links only. Non-containing

references shall not be followed.

get_location(obj)

Returns the location of the textX model object in the form of a dict with

line/col/nchar/filename keys. nchar is a substring length of the obj in

the input string. Filename can be None if the model is loaded from a string.

Return value is convenient for use in TextX exceptions (e.g. raise TextXSemanticError('Some message', **get_location(model_obj)))

textx_isinstance(obj, cls)

Return True if obj is instance of cls taking into account textX rule/class

hierarchy. For textX created classes you can get a reference to a class from

meta-model by keying into it using the class name metamodel['SomeRule'].

Special model object's attributes

Beside attributes specified by the grammar, there are several special

attributes on model objects created by textX. All special attributes' names

start with prefix _tx.

These special attributes don't exist if the type of the resulting model object don't allow dynamic attribute creation (e.g. for Python base builtin types - str, int).

_tx_position and _tx_position_end

_tx_position attribute holds the position in the input string where the

object has been matched by the parser. Each object from the model object graph

has this attribute.

This is an absolute position in the input stream. To convert it to line/column

format use pos_to_linecol method of the parser.

line, col = entity_model._tx_parser.pos_to_linecol(

person_model.entities[0]._tx_position)

Where entity_model is a model constructed by textX.

Previous example will give the line/column position of the first entity.

_tx_position_end is the position in the input stream where the object ends.

This position is one char past the last char belonging to the object. Thus,

_tx_position_end - _tx_position == length of the object str representation.

If you need line, column and filename of a textX object (e.g. for raising

TextXSemanticError) see get_location above.

_tx_filename

This attribute exists only on the root of the model. If the model is loaded

from a file, this attribute will be the full path of the source file. If the

model is created from a string this attribute will be None.

_tx_parser

This attribute represents the concrete parser instance used for the model

(the attribute _parser of the _tx_metamodel is only a blueprint for the

parser of each model instance and cannot be used, e.g., to determine model

element positions in a file. Use the _tx_parser attribute of the model

instead).

_tx_metamodel

This attribute exists only on the root of the model. It is a reference to the meta-model object used for creating the model.

_tx_fqn

Is the fully qualified name of the grammar rule/Python class in regard to the

import path of the grammar file where the rule is defined. This attribute is

used in __repr__ of auto-generated Python classes.

_tx_model_repository

The model may have a model repository (initiated by some scope provider or by the metamodel). This object is responsible to provide and cache other model instances (see textx.scoping.providers).

_tx_model_params

This attribute always exists. It holds all additional parameters passed to

model_from_str or model_from_file of a metamodel. These parameters are

restricted by the metamodel.model_param_defs object (model and object

processors), which is

controlled by the metamodel designer.

Parser configuration

Case sensitivity

Parser is by default case sensitive. For DSLs that should be case insensitive

use ignore_case parameter of the meta-model constructor call.

from textx import metamodel_from_file

my_metamodel = metamodel_from_file('mygrammar.tx', ignore_case=True)

Whitespace handling

The parser will skip whitespaces by default. Whitespaces are spaces, tabs and

newlines by default. Skipping of the whitespaces can be disabled by skipws bool

parameter in the constructor call. Also, what is a whitespace can be redefined by

the ws string parameter.

from textx import metamodel_from_file

my_metamodel = metamodel_from_file('mygrammar.tx', skipws=False, ws='\s\n')

Whitespaces and whitespace skipping can be defined in the grammar on the level of a single rule by rule modifiers.

Automatic keywords

When designing a DSL, it is usually desirable to match keywords on word

boundaries. For example, if we have Entity grammar from the above, then a word

entity will be considered a keyword and should be matched on word boundaries

only. If we have a word entity2 in the input string at the place where

entity should be matched, the match should not succeed.

We could achieve this by using a regular expression match and the word boundaries regular expression rule for each keyword-like match.

Enitity:

/\bentity\b/ name=ID ...

But the grammar will be cumbersome to read.

textX can do automatic word boundary match for all keyword-like string matches.

To enable this feature set parameter autokwd to True in the constructor

call.

from textx import metamodel_from_file

my_metamodel = metamodel_from_file('mygrammar.tx', autokwd=True)

Any simple match from the grammar that is matched by the

regular expression [^\d\W]\w* is considered to be a keyword.

Memoization (a.k.a. packrat parsing)

This technique is based on memoizing result on each parsing expression rule. For some grammars with a lot of backtracking this can yield a significant speed increase at the expense of some memory used for the memoization cache.

Starting with textX 1.4 this feature is disabled by default. If you think that

parsing is slow, try to enable memoization by setting memoization parameter to

True during meta-model instantiation.

from textx import metamodel_from_file

my_metamodel = metamodel_from_file('mygrammar.tx', memoization=True)

textx command/tool

Executing textX related CLI commands

textX has an extensible CLI tool which is a central hub for all textX CLI commands.

When you install textX with cli dependencies (pip install textX[cli]) you get

a CLI command textx which you call to execute any of the registered

sub-commands.

textX registers several sub-commands:

check- used to check models and meta-models for syntax and semantic validitygenerate- used to call registered generators and transform given models to other target languages. This command is also used to visualize models and meta-models by generating visualizations. To see how to register your own generators head over to registration/discover section.list-languages/list-generators- used to list registered languages and generators (see the registration/discover feature for more explanations)

We eat our own dog food so all sub-commands are registered using the same mechanism and there is no distinction between the core commands provided by the textX itself and the commands provided by third-party Python packages.

Please, see Extending textx command section bellow

on how to define your own sub-commands investigate pyproject.toml of the textX

project.

Some of development commands/tools are registered by

textX-dev project which is an optional dev

dependency of textX. In order to have all these commands available you can

either install textX-dev project or install textX dev dependencies with pip install textX[dev].

Using the tool

To see all available sub-commands just call the textx:

$ textx

Usage: textx [OPTIONS] COMMAND [ARGS]...

Options:

--debug Debug/trace output.

--help Show this message and exit.

Commands:

check Check/validate model given its file path.

generate Run code generator on a provided model(s).

list-generators List all registered generators

list-languages List all registered languages

To get a help on a specific command:

$ textx check --help

Usage: textx check [OPTIONS] MODEL_FILES...

Check/validate model given its file path. If grammar is given use it to

construct the meta-model. If language is given use it to retrieve the

registered meta-model.

Examples:

# textX language is built-in, so always registered:

textx check entity.tx

# If the language is not registered you must provide the grammar:

textx check person.ent --grammar entity.tx

# or if we have language registered (see: text list-languages) it's just:

textx check person.ent

# Use "--language" if meta-model can't be deduced by file extension:

textx check person.txt --language entity

# Or to check multiple model files and deduce meta-model by extension

textx check *

Options:

--language TEXT A name of the language model conforms to.

--grammar TEXT A file name of the grammar used as a meta-model.

-i, --ignore-case Case-insensitive model parsing. Used only if "grammar" is

provided.

--help Show this message and exit.

Extending textx command

textx command can be extended from other installed Python packages using

entry

points.

Using command extension one can add new commands and command groups to the

textx command.

textx uses click library for CLI commands

processing. That makes really easy to create new commands and command groups.

To create a new command you need to provide a Python function accepting a

click command group (in this case a top level textx command) and use the

group to register additional commands using click decorators.

For example:

import click

def testcommand(textx):

@textx.command()

@click.argument('some_argument', type=click.Path())

@click.option('--some-option', default=False, is_flag=True,

help="Testing option in custom command.")

def testcommand(some_argument, some_option):

"""

This command will be found as a sub-command of `textx` command once this

project is installed.

"""

click.echo("Hello sub-command test!")

Register new command in your project's pyproject.toml file under the entry

point textx_commands (we are assuming that testcommand function is in

package cli).

[project.entry-points.textx_commands]

testcommand = "cli:testcommand"

If you install now your project in the same Python environment where textX is

installed you will see that textx command now has your command registered.

$ textx

Usage: textx [OPTIONS] COMMAND [ARGS]...

Options:

--debug Debug/trace output.

--help Show this message and exit.

Commands:

check Check validity of meta-model and optionally model.

testcommand This command will be found as a sub-command of `textx`...

visualize Generate .dot file(s) from meta-model and optionally model.

$ textx testcommand some_argument

Hello sub-command test!

Similarly you can create new command groups. You can have a group level options and a command level options and arguments.

Here is a full example:

import click

def create_testgroup(textx):

@textx.group()

@click.option('--group-option', default=False, is_flag=True,

help="Some group option.")

def testgroup(group_option):

"""Here we write group explanation."""

pass

@testgroup.command()

@click.argument('some_argument', type=click.Path())

@click.option('--some-option', default=False, is_flag=True,

help="Testing option in custom command.")

def groupcommand1(some_argument, some_option):

"""And here we write a doc for particular command."""

click.echo("GroupCommand1: argument: {}, option:{}".format(

some_argument, some_option))

@testgroup.command()

@click.argument('some_argument', type=click.Path())

@click.option('--some-option', default=False, is_flag=True,

help="Testing option in custom command.")

def groupcommand2(some_argument, some_option):

"""This is another command docs."""

click.echo("GroupCommand2: argument: {}, option:{}".format(

some_argument, some_option))

In this example we created a new group called testgroup. We use that group in

the rest of the code to decorate new commands belonging to the group.

As usual, we have to register our function in the extension point

textx_commands inside pyproject.toml:

[project.entry-points.textx_commands]

testgroup = "cli:create_testgroup"

If MyProject is installed in the environment where textX is installed you'll

see that your command group is now accessible by the textx command:

$ textx

Usage: textx [OPTIONS] COMMAND [ARGS]...

Options:

--debug Debug/trace output.

--help Show this message and exit.

Commands:

check Check validity of meta-model and optionally model.

testcommand This command will be found as a sub-command of `textx`...

testgroup Here we write group explanation.

visualize Generate .dot file(s) from meta-model and optionally model.

$ textx testgroup

Usage: textx testgroup [OPTIONS] COMMAND [ARGS]...

Here we write group explanation.

Options:

--group-option Some group option.

--help Show this message and exit.

Commands:

groupcommand1 And here we write a doc for particular command.

groupcommand2 This is another command docs.

$ textx testgroup groupcommand1 first_argument

GroupCommand1: argument: first_argument, option:False

For a full example please take a look at this test and this example test project.

For more information please see click documentation.

Registration and discovery

textX has an API for registration and discovery of languages and code

generators. This enable developing languages and generators for others to use by

simply installing from PyPI using pip.

textX utilizes a concept of extension

point to

declaratively specify the registration of language or generator. Each Python

project/package may in its pyproject.toml declare this extensions. Once a

Python package which declare the extension is installed in the environment, the

extension can be dynamically found.

To make it easier to find languages and generators on PyPI we recommend the following naming scheme for the Python packages that provide a single language or generator:

textx-lang-<language name>- for language package (e.g.textx-lang-entity)textx-gen-<source language>-<target language>- for generator package (e.g.textx-gen-entity-java)

With this scheme in place searching PyPI for all languages or generators can be

easily achieved by pip search command (e.g. pip search textx-lang to find

all languages).

textX languages

textX language consists of a meta-model which holds information of all language concepts and their relations (a.k.a. Abstract syntax) as well as a parser which knows how to transform textual representation of a language (a.k.a. Concrete syntax) to a model which conforms to the meta-model.

Also, each language has its unique name, a short one-line description and a file name pattern for recognizing files that contains textual representation of its models.

Registering a new language

To register a new language first you have to create an instance of

LanguageDesc class (or its subclass) providing the name of the language, the

file pattern of files using the language (e.g. *.ent), the description of the

language and finally a callable that should be called to get the instance of the

language meta-model. Alternatively, you can provide the instance of the

meta-model instead of the callable.

For example:

from textx import LanguageDesc

def entity_metamodel():

# Some code that constructs and return the language meta-model

# E.g. call to metamodel_from_file

entity_lang = LanguageDesc('entity',

pattern='*.ent',

description='Entity-relationship language',

metamodel=entity_metamodel)

The next step is to make this language discoverable by textX. To do this we have

to register our entity_lang object in the pyproject.toml entry point named

textx_languages.

[project.entry-points.textx_languages]

entity = "entity.metamodel:entity_lang"

In this example entity.metamodel is the Python module where entity_lang is defined.

When you install this project textX will discover your language and offer it through registration API (see bellow).

As a convenience there is a language decorator that makes creating an instance

of LanguageDesc more convenient. Use it to decorate meta-model callable and

provide the name and the file pattern as parameters. Docstring of the decorated

function will be used as a language description.

The equivalent of the previous definition using the language decorator would

be:

from textx import language

@language('entity', '*.ent')

def entity_lang():

"""

Entity-relationship language

"""

# Some code that constructs and return the language meta-model

# E.g. call to metamodel_from_file

The pyproject.toml entry point registration would be the same.

Language name is its unique identifier. There must not exist two languages

with the same name. The name consists of alphanumerics, underscores (_) and

dashes (-). If you plan to publish your language on PyPI choose a name that is

higly unlikely to collide with other languages (e.g. by using some prefix,

mycompanyname-entity instead of just entity).

Listing languages

textX provides a core command list-languages that lists all registered

languages in the current environment. We eat our own dog food so even textX

grammar language is registered as a language using the same mechanism.

$ textx list-languages

txcl (*.txcl) textx-gen-coloring A language for syntax highlight definition.

textX (*.tx) textX A meta-language for language definition